Data Pre-processing in ML Problem-Solving - part 2

This is the second part of a 3-part piece. These articles are notes from my self-study of Data pre-processing in ML problem solving. Major resource for study: google AI educational resources. Here is Part 1

Still on data collection; we've addressed the steps to take, how to ensure quality, how to label data, the different sources of both training and prediction data. Now we address the second part of constructing the data set:

Sampling and Splitting Data

Sampling

This involves selecting a subset of available data for training in cases where there is too much available data.

The decision on how to select that subset ultimately depends on the problem: how do we want to predict? what features do we want?

NOTE: If your data contains Personally Identifiable Information (PII), it may be important to filter it from your data e.g. to remove infrequent features. This filtering will skew your distribution because you will lose information at the tail (the part of the distribution with very low values, far from the mean). Note that the dataset will be biased towards the head queries because of the skew. Beware of it during your analysis

At serving time, you may decide to use the tail you removed.

Imbalanced Data

imbalanced: a classification dataset with skewed class proportions.

majority classes: classes that make up a huge proportion of the dataset.

minority classes: classes that make up a smaller proportion of the data set.

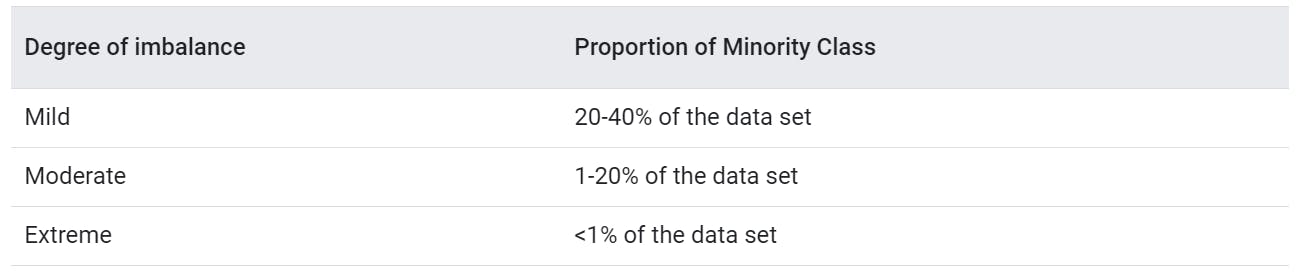

what counts as imbalanced data?

why do we look out for imbalanced data?

Because you may need to apply downsampling and upweighting.

Downsampling and Upweighting

Downsampling: training on the disproportionately low subset of the majority class data i.e extract random examples from the dominant class

Upweighting: adding an example weight to the downsampled class equal to the factor by which you downsampled. Example weights means counting an individual example more importantly during training. An example weight of 10 means the model treats the example as 10 times as important (when computing loss) as it would an example of weight 1

example weight = original example weight x downsampling factor

Consider this example of a model that detects fraud. Instances of fraud happens once in 200 transactions in the dataset, so in the true distribution, about 0.5% of the data is positive.

This is problematic because the training model will spend most of its time on negative examples and not learn enough from positive ones.

The recommendation is to first train on the true distribution

In the fraud dataset of 1 positive to 200 negatives:

- downsampling can be done by a factor of 20. So 20 positives to 200 negatives or 1 to 10 negatives. Now about 10% of our data is positive.

- we upweight the downsampled class by addding example weights(data weights) to the downsampled class. since we downsampled by a factor of 20, we add 20 weights.

Effects of Downsampling and Upweighting

- faster convergence during training because we will see the minority class more often

- Disk space management is enhanced because by consolidating the majority class into fewer examples with larger weights, we spend less disk space storing them. This savings allows more disk space for the minority class, so we can collect a greater number and a wider range of examples from that class.

- calibration is ensured through upweighting. the outputs can still be interpreted as probabilities.

Data Split Example

After sampling, the next step is split the data into:

- Training sets

- Validation sets.

- Testing sets

Often, splitting is done randomly because it is the best approach for many ML problems. However, this is not always the best solution especially for datasets in which the examples are naturally clustered into similar examples.

Consider an example in which we want our model to classify the topic from the text of a news article:

Random split will be problematic because news stories appear in clusters. multiple stories about the same topic are often published around the same time. random splitting wouldn't work because all the stories will come in at the same time, so doing the split like this would cause skew.

A simple approach to fixing this problem would be to split our data based on when the story was published, perhaps by day the story was published.

With tens of thousands or more news stories, a percentage may get divided across the days. That's okay, though; in reality these stories were split across two days of the news cycle.

Alternatively, you could throw out data within a certain distance of your cutoff to ensure you don't have any overlap. For example, you could train on stories for the month of April, and then use the second week of May as the test set, with the week gap preventing overlap

So you could collect 30 days of data, split the data by time, train on data from days 1-29 and and evaluate on data from day 30.

Splitting your Data

Time-based splits work best with very large datasets (in the order of 10s of millions of examples).

In projects with less data, the distributions end up quite different between training, validation, and testing.

You could end up with a skew, your data can be training on information it would not necessarily have access to at prediction time.

Domain knowledge can inform you how to split your data

Never train on test data. If you are seeing surprisingly good results on your evaluation metrics, it might be a sign that you are accidentally training on the test set. For example, high accuracy might indicate that test data has leaked into the training set.

Randomisation

This refers to making your data generation piepline reproducible. of your data by making sure any randomisation in your data can be made deterministic.

Say you want to add a feature to see how it affects model quality. For a fair experiment, your datasets should be identical except for this new feature. If your data generation runs are not reproducible, you can't make these datasets.

This can be applied during both sampling and splitting the data. It can be done in the following ways:

- seed your random number generators: Seeding ensures that the RNG outputs the same values in the same order each time you run it, recreating your dataset

- use invariant hash keys: hashing involves mapping data of arbitrary size to fixed-size values. The values returned by a hash function are called hash values, hash codes etc. You can hash each example, and use the resulting integer to decide in which split to place the example.

The inputs to your hash function shouldn't change each time you run the data generation program. Don't use the current time or a random number in your hash, for example, if you want to recreate your hashes on demand.

Note: In hashing, hash on query + date, which would result in a different hashing each day as opposed to hashing on just the query which could lead to:

- Your training set will see a less diverse set of queries

- Your evaluation sets will be artificially hard, because they won't overlap with your training data. In reality, at serving time, you'll have seen some of the live traffic in your training data, so your evaluation should reflect that